Andrew H. Liu

Google Research and Machine Intelligence

What IS Machine Learning?

I'm interested in solving challenging vision, graphics, and representation learning problems.

What IS Machine Learning?

I'm interested in solving challenging vision, graphics, and representation learning problems.

We introduce the problem of perpetual view generation—long-range generation of novel views corresponding to an arbitrarily long camera trajectory given a single image. This is a challenging problem that goes far beyond the capabilities of current view synthesis methods, which work for a limited range of viewpoints and quickly degenerate when presented with a large camera motion. Methods designed for video generation also have limited ability to produce long video sequences and are often agnostic to scene geometry. We take a hybrid approach that integrates both geometry and image synthesis in an iterative render, refine, and repeat framework, allowing for long-range generation that cover large distances after hundreds of frames. Our approach can be trained from a set of monocular video sequences without any manual annotation. We propose a dataset of aerial footage of natural coastal scenes, and compare our method with recent view synthesis and conditional video generation baselines, showing that it can generate plausible scenes for much longer time horizons over large camera trajectories compared to existing methods.

We present a framework for automatically reconfiguring images of street scenes by populating, depopulating, or repopulating them with objects such as pedestrians or vehicles. Applications of this method include anonymizing images to enhance privacy, generating data augmentations for perception tasks like autonomous driving, and composing scenes to achieve a certain ambiance, such as empty streets in the early morning. At a technical level, our work has three primary contributions: (1) a method for clearing images of objects, (2) a method for estimating sun direction from a single image, and (3) a way to compose objects in scenes that respects scene geometry and illumination. Each component is learned from data with minimal ground truth annotations, by making creative use of large-numbers of short image bursts of street scenes. We demonstrate convincing results on a range of street scenes and illustrate potential applications.

We propose a learning-based framework for disentangling outdoor scenes into temporally-varying illumination and permanent scene factors. Inspired by the classic intrinsic image decomposition, our learning signal builds upon two insights: 1) combining the disentangled factors should reconstruct the original image, and 2) the permanent factors should stay constant across multiple temporal samples of the same scene. To facilitate training, we assemble a city-scale dataset of outdoor timelapse imagery from Google Street View, where the same locations are captured repeatedly through time. This data represents an unprecedented scale of spatio-temporal outdoor imagery. We show that our learned disentangled factors can be used to manipulate novel images in realistic ways, such as changing lighting effects and scene geometry.

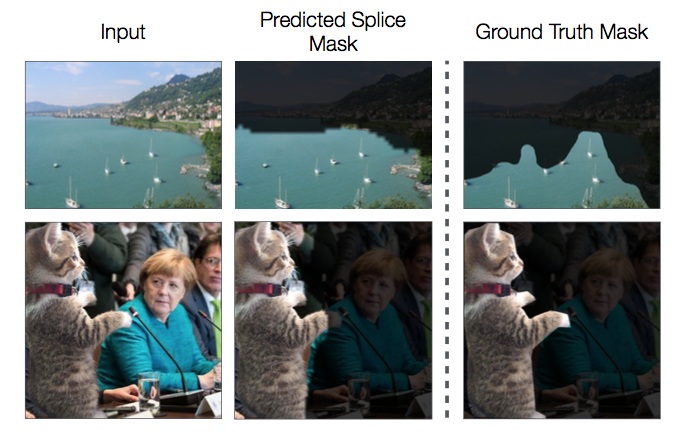

Advances in photo editing and manipulation tools have made it significantly easier to create fake imagery. Learning to detect such manipulations, however, remains a challenging problem due to the lack of sufficient training data. In this paper, we propose a model that learns to detect visual manipulations from unlabeled data through self-supervision. Given a large collection of real photographs with automatically recorded EXIF metadata, we train a model to determine whether an image is self-consistent — that is, whether its content could have been produced by a single imaging pipeline. We apply this self-supervised learning method to the task of detecting and localizing image splices. Although the proposed model obtains state-of-the-art performance on several benchmarks, we see it as merely a step in the long quest for a truly general-purpose visual forensics tool.

We used Siamese Networks to learn feature encoders for objects that are invariant to pose changes and apperances. By using Kalman filtering, we are able to generate object tracks based on similarity scores.

Undergraduate final project for CS 194-26. Used SIFT Flow algorithm to project images onto an actively deforming surface.